The Dawn of Computing: Early Processor Beginnings

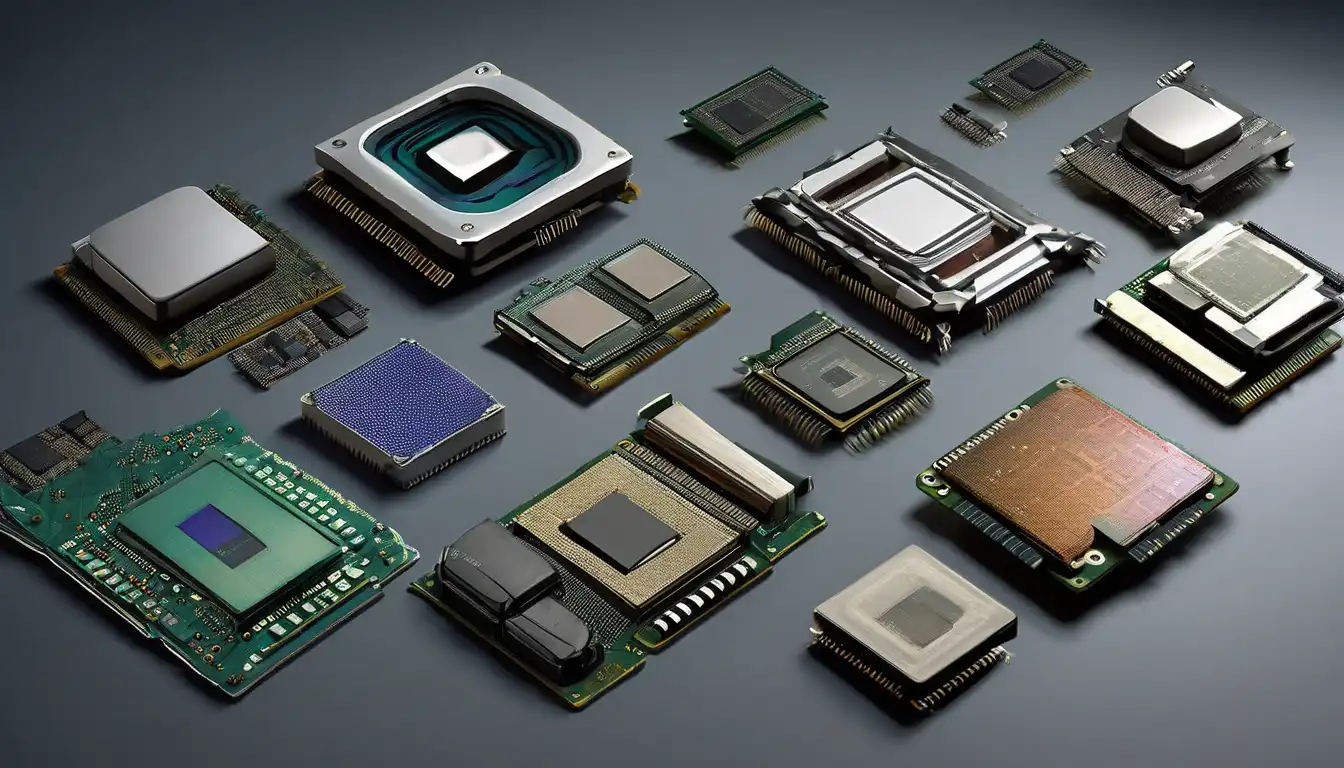

The evolution of computer processors represents one of the most remarkable technological journeys in human history. Beginning with massive vacuum tube systems that occupied entire rooms, processors have transformed into microscopic marvels capable of billions of calculations per second. This transformation didn't happen overnight—it unfolded through decades of innovation, each generation building upon the last to create increasingly powerful and efficient computing devices.

In the 1940s and 1950s, the first electronic computers used vacuum tubes as their primary switching components. These early machines were enormous, power-hungry, and prone to frequent failures. The ENIAC, one of the first general-purpose electronic computers, contained roughly 17,500 vacuum tubes and drew about 150 kilowatts of power, comparable to the demand of a small neighborhood. Despite their limitations, these early systems laid the foundation for modern computing and demonstrated the potential of electronic data processing.

The Transistor Revolution

The invention of the transistor in 1947 marked a pivotal moment in processor evolution. These solid-state devices were smaller, more reliable, and consumed significantly less power than vacuum tubes. By the late 1950s, transistors had largely replaced vacuum tubes in new computer designs, enabling the development of smaller, more affordable computers. The IBM 1401, introduced in 1959, became one of the first commercially successful transistor-based computers, bringing computing power to businesses worldwide.

Transistor technology continued to improve throughout the 1960s, with manufacturers finding ways to make transistors smaller and more efficient. This period saw the development of early integrated circuits, where multiple transistors were fabricated on a single piece of semiconductor material. These advances paved the way for the next major breakthrough in processor technology.

The Integrated Circuit Era

The 1970s witnessed the rise of the microprocessor—a complete central processing unit on a single chip. Intel's 4004, released in 1971, contained 2,300 transistors and operated at 740 kHz. While primitive by today's standards, the 4004 demonstrated that complex processing capabilities could be integrated into a single, affordable component. This innovation made personal computing possible and set the stage for the digital revolution.

Throughout the 1970s and 1980s, microprocessor technology advanced rapidly. The Intel 8080 (1974) and Zilog Z80 (1976) powered the first generation of personal computers, while the Motorola 68000 series found its way into early workstations and the original Apple Macintosh. These processors featured increasingly sophisticated architectures, with improved instruction sets and better performance characteristics.

The x86 Architecture Dominance

Intel's 8086 processor, introduced in 1978, established the x86 architecture that would dominate personal computing for decades to come. The IBM PC's adoption of the 8088 (a variant of the 8086 with an 8-bit external data bus) in 1981 cemented x86 as the industry standard. Subsequent generations each brought a major capability to the mainstream: the 80286 added protected mode operation, the 80386 added 32-bit processing, and the 80486 integrated the math coprocessor onto the chip.

The 1990s saw intense competition between Intel and AMD, driving rapid innovation in processor design. The Pentium processor family brought superscalar execution to x86, allowing more than one instruction to be issued per clock cycle. Clock speeds increased from tens of megahertz to hundreds of megahertz, while transistor counts grew from millions to tens of millions. Over the same period, reduced instruction set computing (RISC) architectures became well established in workstations and servers, offering an alternative approach to processor design.

The Multi-Core Revolution

As rising power consumption and heat output made further clock speed increases impractical in the early 2000s, manufacturers shifted focus to multi-core designs. Instead of making single cores faster, they began integrating multiple processing cores on a single chip. AMD's Athlon 64 X2 (2005) and Intel's Core 2 Duo (2006) demonstrated the performance benefits of parallel processing, enabling computers to handle multiple tasks simultaneously more efficiently.

The transition to multi-core processors represented a fundamental shift in computing philosophy. Software developers had to learn to write parallel code to take full advantage of these new architectures. Operating systems became more sophisticated at distributing workloads across available cores, while processor manufacturers developed increasingly complex cache hierarchies and memory controllers to keep multiple cores fed with data.
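To make the idea of parallel code concrete, here is a minimal Python sketch of the basic pattern: split a workload into independent chunks and hand each chunk to a pool of workers. The function names (`sum_of_squares`, `split_range`, `parallel_sum_of_squares`) and the workload itself are hypothetical, chosen only for illustration. The sketch defaults to a process pool on the assumption that the work is CPU-bound, since threads in standard CPython generally do not execute such code on several cores at once.

```python
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor
import os


def sum_of_squares(bounds):
    """Sum i*i for i in the half-open range [start, stop)."""
    start, stop = bounds
    return sum(i * i for i in range(start, stop))


def split_range(n, parts):
    """Split [0, n) into `parts` contiguous chunks of near-equal size."""
    step, extra = divmod(n, parts)
    chunks, start = [], 0
    for k in range(parts):
        # The first `extra` chunks take one additional element each.
        stop = start + step + (1 if k < extra else 0)
        chunks.append((start, stop))
        start = stop
    return chunks


def parallel_sum_of_squares(n, workers=None, executor_cls=ProcessPoolExecutor):
    """Compute sum(i*i for i in range(n)) by farming chunks out to a pool.

    `executor_cls` is an illustrative knob: ProcessPoolExecutor gives one
    process per worker, while ThreadPoolExecutor can be swapped in to run
    the same decomposition on threads.
    """
    workers = workers or os.cpu_count() or 1
    chunks = split_range(n, workers)
    with executor_cls(max_workers=workers) as pool:
        # Each chunk is independent, so the partial sums can be computed
        # in any order and combined at the end.
        return sum(pool.map(sum_of_squares, chunks))
```

With the default process pool, a call such as `parallel_sum_of_squares(1_000_000)` should be made from under an `if __name__ == "__main__":` guard on platforms that start worker processes by re-importing the main module. The key design point is that the chunks share no state, which is what lets the operating system schedule them on separate cores.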

Specialized Processing and Heterogeneous Computing

Recent years have seen the rise of specialized processing units designed for specific workloads. Graphics processing units (GPUs) have evolved from simple display controllers to massively parallel processors capable of handling complex computational tasks. The integration of GPU cores alongside traditional CPU cores in system-on-chip (SoC) designs has enabled new levels of performance and efficiency in mobile devices and embedded systems.

Modern processors also incorporate specialized units for artificial intelligence, machine learning, and cryptography. Apple's M-series chips, for example, include a Neural Engine, a neural processing unit optimized for machine learning workloads. This trend toward heterogeneous computing, which combines different types of processing units optimized for specific tasks, represents the current frontier of processor evolution.

Future Directions: Quantum and Neuromorphic Computing

Looking ahead, processor technology continues to evolve in new directions. Quantum computing promises to solve certain problems that are intractable for classical computers, using quantum bits (qubits) that can exist in a superposition of the 0 and 1 states rather than holding a single definite value. While still in its early stages, quantum processor development has seen significant progress from companies like IBM, Google, and D-Wave.

Neuromorphic computing represents another promising avenue, with processors designed to mimic the structure and function of the human brain. These systems could enable more efficient pattern recognition and sensory processing, potentially revolutionizing artificial intelligence applications. Companies like Intel and IBM are actively developing neuromorphic chips that could power the next generation of intelligent systems.

The evolution of computer processors has been characterized by continuous innovation and paradigm shifts. From vacuum tubes to quantum bits, each generation has built upon the lessons of the previous one while introducing fundamentally new approaches to computation. As we look to the future, it's clear that processor technology will continue to evolve, enabling new applications and capabilities that we can only begin to imagine.

Understanding the history of processor development provides valuable context for appreciating current technology trends and anticipating future innovations in the computing landscape.